Metrics to measure the effectiveness of QA testing services

In the rapidly evolving field of software development, technological innovations are transforming quality assurance (QA) testing. These advancements are not only making tests more efficient and accurate but also reshaping how businesses approach software quality. Let's explore some of the most impactful technological innovations in QA testing, followed by the metrics used to measure how effective testing really is.

Embracing technological advancements in QA testing

Automation through Artificial Intelligence (AI) and machine learning

One of the most significant shifts in QA testing has been the integration of Artificial Intelligence (AI) and Machine Learning (ML). These technologies enable automated testing solutions that learn from data, predict outcomes, and adapt to changes with minimal human intervention. AI-powered tools can analyze large volumes of test data to surface patterns that would be difficult for human testers to spot. This enables predictive testing: likely defects are flagged based on historical data, reducing the risk of major issues after launch. Because effort is concentrated where it is most needed, this targeted approach saves time and allocates resources more effectively, improving the overall efficiency of the QA testing process.

For example, a software company developing financial planning tools uses AI to refine its test automation strategy. By analyzing past test data, the AI model identifies high-risk areas of the software that are prone to defects, based on code changes and user behavior patterns. This lets the QA team prioritize testing on those areas before each release, helping reduce the number of defects that reach end users and speeding up the development cycle.
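
The core idea, ranking parts of the code by predicted defect risk, can be sketched without a trained model. The Python example below is a simple heuristic standing in for the AI model described above, not an ML implementation: it combines each module's historical defect rate with how much the module changed in the current release. The `ModuleHistory` fields, the scoring formula, and the module names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModuleHistory:
    """Per-module history (hypothetical fields for illustration)."""
    name: str
    past_changes: int   # commits that touched the module in earlier releases
    past_defects: int   # defects traced back to the module
    changed_lines: int  # lines changed since the last release


def risk_score(m: ModuleHistory) -> float:
    # Historical defects per change, with +1/+2 smoothing so modules
    # without any history are not treated as zero-risk.
    defect_rate = (m.past_defects + 1) / (m.past_changes + 2)
    # Weight that rate by how much the module changed in this release.
    return defect_rate * m.changed_lines


def prioritize(modules: list[ModuleHistory]) -> list[str]:
    """Return module names ordered from highest to lowest risk."""
    return [m.name for m in sorted(modules, key=risk_score, reverse=True)]


modules = [
    ModuleHistory("budget_calculator", past_changes=40, past_defects=12, changed_lines=300),
    ModuleHistory("report_export", past_changes=25, past_defects=1, changed_lines=500),
    ModuleHistory("login", past_changes=10, past_defects=0, changed_lines=20),
]
print(prioritize(modules))  # ['budget_calculator', 'report_export', 'login']
```

A learned model would replace `risk_score` with predictions trained on labeled history, but the output is used the same way: as an ordering that tells testers where to look first.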

Enhanced test automation with Robotic Process Automation (RPA)

Robotic Process Automation (RPA) has taken test automation a step further by automating repetitive, rule-based tasks that were previously performed manually. RPA tools can simulate user interactions with a software application, running a wide range of test scenarios faster than human testers. This speeds up testing cycles and reduces the chance of human error, producing more reliable results. For regression testing, RPA can automate complex end-to-end workflows within an application, which is especially useful when updates are frequent and can affect multiple interconnected systems.


For example, an insurance company employs RPA to automate its claims processing software tests. The RPA bots are programmed to mimic end-user actions, such as filling out claim forms, uploading documents, and initiating claims processing. This not only tests the functionality under real-world scenarios but also checks the performance and stability of the system under load. By automating these repetitive tasks, the company significantly reduces manual testing hours and improves accuracy in test results.
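
Under the hood, an RPA-driven regression suite replays scripted user journeys and compares the outcome with what is expected. RPA tools drive the real user interface; the Python sketch below swaps UI automation for direct function calls so that the data-driven replay pattern is visible. `ClaimForm`, `submit_claim`, and its validation rules are hypothetical stand-ins for the insurance application.

```python
from dataclasses import dataclass, field


@dataclass
class ClaimForm:
    """Hypothetical claim form; real fields depend on the application."""
    policy_id: str
    amount: float
    documents: list[str] = field(default_factory=list)


def submit_claim(form: ClaimForm) -> str:
    """Hypothetical system under test: returns the resulting claim status."""
    if not form.policy_id:
        return "rejected: missing policy"
    if form.amount <= 0:
        return "rejected: invalid amount"
    if not form.documents:
        return "pending: documents required"
    return "submitted"


# Each scenario mirrors one scripted user journey: what the bot enters
# into the form, and the status the application should then show.
SCENARIOS = [
    (ClaimForm("P-100", 250.0, ["receipt.pdf"]), "submitted"),
    (ClaimForm("P-101", 900.0), "pending: documents required"),
    (ClaimForm("", 100.0, ["photo.jpg"]), "rejected: missing policy"),
    (ClaimForm("P-102", -5.0, ["receipt.pdf"]), "rejected: invalid amount"),
]


def run_scenarios() -> list[str]:
    """Replay every scenario and collect a message for each mismatch."""
    failures = []
    for form, expected in SCENARIOS:
        actual = submit_claim(form)
        if actual != expected:
            failures.append(f"{form.policy_id or '<blank>'}: expected {expected!r}, got {actual!r}")
    return failures


print(run_scenarios() or "all scenarios passed")
```

Keeping scenarios as data means new regression cases are added as rows rather than as new scripts.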

Cloud-based testing environments

Cloud computing has introduced a new paradigm in QA testing by providing scalable, flexible, and accessible testing environments. Cloud-based testing platforms let teams provision and manage testing resources on demand, scaling them up or down to match project requirements. This keeps costs in line with actual usage and can shorten time-to-market for software products. Using cloud environments, QA teams can simulate thousands of virtual users interacting with an application to perform load and stress testing without investing in expensive physical infrastructure.
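
Cloud load-testing services generate this traffic from many machines at once. The Python sketch below shows the same idea on a single machine using `asyncio`: many concurrent virtual users call a hypothetical `handle_request` stand-in, and the run reports mean and 95th-percentile latency. In a real test, `handle_request` would be replaced by calls to a deployed environment.

```python
import asyncio
import random
import statistics
import time


async def handle_request(user_id: int) -> None:
    """Hypothetical stand-in for the application endpoint under test."""
    await asyncio.sleep(random.uniform(0.01, 0.05))  # simulated server work


async def virtual_user(user_id: int, requests: int, latencies: list[float]) -> None:
    """One simulated user issuing sequential requests and recording latency."""
    for _ in range(requests):
        start = time.perf_counter()
        await handle_request(user_id)
        latencies.append(time.perf_counter() - start)


async def run_load_test(users: int, requests_per_user: int) -> dict[str, float]:
    latencies: list[float] = []
    # All virtual users run concurrently, as a cloud load generator would.
    await asyncio.gather(
        *(virtual_user(u, requests_per_user, latencies) for u in range(users))
    )
    return {
        "requests": len(latencies),
        "mean_ms": statistics.mean(latencies) * 1000,
        # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
        "p95_ms": statistics.quantiles(latencies, n=20)[-1] * 1000,
    }


print(asyncio.run(run_load_test(users=200, requests_per_user=5)))
```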

For example, a multinational corporation with a suite of e-commerce applications utilizes cloud-based testing to manage its global QA needs. By leveraging a cloud platform, the QA team can instantly deploy their testing environments in multiple regions simultaneously to test localization settings and multi-regional performance. This ensures that the application provides a consistent user experience worldwide and adheres to local compliance requirements, all while maintaining a flexible, cost-effective testing process.

Integration of IoT and QA testing

As the Internet of Things (IoT) continues to expand, QA testing has had to evolve to address the challenges posed by interconnected IoT devices. Testing in an IoT environment requires not only software testing but also hardware, network, and security testing, which can be highly complex. Newer testing techniques are designed to handle the diverse, integrated nature of IoT applications: specialized IoT testing tools can automate the simulation of real-world IoT environments in which multiple devices with different operating systems and hardware configurations interact with one another.

For example, a home automation company develops a new smart thermostat that interacts with various other smart devices like lights and security cameras. To ensure seamless integration and performance, the QA team utilizes an IoT testing platform that simulates a smart home environment, interconnecting these devices. The platform allows them to test various scenarios, such as the thermostat’s response to a security breach or power outage, ensuring that all devices behave as expected under different conditions.
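
A simulated environment like this can be reduced to a set of device states plus rules for how each device reacts to shared events. The Python sketch below models the thermostat scenario above; the `SmartHome` class, event names, and reaction rules are assumptions for illustration, not the behavior of any real platform.

```python
from dataclasses import dataclass, field


@dataclass
class SmartHome:
    """Hypothetical simulated home: device states plus an event log."""
    thermostat_mode: str = "comfort"
    lights_on: bool = False
    camera_recording: bool = False
    on_backup_power: bool = False
    log: list[str] = field(default_factory=list)

    def publish(self, event: str) -> None:
        """Deliver an event to every simulated device (assumed rules)."""
        self.log.append(event)
        if event == "security_breach":
            self.lights_on = True
            self.camera_recording = True
            self.thermostat_mode = "away"
        elif event == "power_outage":
            self.on_backup_power = True
            self.thermostat_mode = "eco"  # conserve backup power
        elif event == "power_restored":
            self.on_backup_power = False
            self.thermostat_mode = "comfort"


def run_scenario(events: list[str]) -> SmartHome:
    """Start from a fresh home and replay the events in order."""
    home = SmartHome()
    for event in events:
        home.publish(event)
    return home


# Scenario: a break-in occurs, then the power fails.
home = run_scenario(["security_breach", "power_outage"])
assert home.thermostat_mode == "eco"
assert home.camera_recording  # recording should continue through the outage
assert home.on_backup_power
print("scenario passed:", home.log)
```

Each scenario is just a list of events, so combinations such as an outage during a breach can be generated and checked systematically.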


Blockchain for advanced security technologies

Blockchain technology is increasingly being explored for its potential to strengthen the security of QA testing. By leveraging blockchain, QA processes can ensure data integrity, security, and traceability, which is particularly important for applications that handle sensitive data, such as those in finance and healthcare. In blockchain-based (decentralized) applications, each transaction or test result is recorded on a secure, tamper-evident ledger, which makes the testing process more transparent and secure.

Example: A healthcare application that manages patient records and appointment scheduling integrates blockchain to strengthen its security testing framework. A decentralized ledger logs every patient record transaction, making records tamper-evident and traceable. During QA testing, testers verify the integrity of the blockchain transactions: they check that no one can make unauthorized changes to the records and that all access logs remain immutable. This approach not only secures patient data but also builds users' trust in the application's reliability and safety.
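
The integrity check testers perform relies on hash linking: each ledger entry stores the hash of the previous one, so editing any past entry breaks the chain. The Python sketch below illustrates only that property. It is not a distributed blockchain (there are no peers or consensus), and the record fields are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


@dataclass
class Block:
    index: int
    record: dict      # e.g. a hypothetical access-log entry or test result
    prev_hash: str
    block_hash: str


def compute_hash(index: int, record: dict, prev_hash: str) -> str:
    # sort_keys makes the serialization, and therefore the hash, deterministic.
    payload = json.dumps({"index": index, "record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def append(chain: list[Block], record: dict) -> None:
    """Add a record, linking it to the hash of the previous block."""
    prev_hash = chain[-1].block_hash if chain else GENESIS_PREV
    index = len(chain)
    chain.append(Block(index, record, prev_hash, compute_hash(index, record, prev_hash)))


def verify(chain: list[Block]) -> bool:
    """Return False if any block was altered or a link was broken."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1].block_hash if i > 0 else GENESIS_PREV
        if block.prev_hash != expected_prev:
            return False
        if block.block_hash != compute_hash(block.index, block.record, block.prev_hash):
            return False
    return True


ledger: list[Block] = []
append(ledger, {"patient": "P-001", "action": "read", "user": "dr_lee"})
append(ledger, {"patient": "P-001", "action": "update", "user": "dr_lee"})
print(verify(ledger))                  # True
ledger[0].record["user"] = "intruder"  # simulate an unauthorized edit
print(verify(ledger))                  # False
```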

Which QA testing metrics should you measure?

Defect detection rate (DDR)

Defect Detection Rate (DDR) measures the percentage of defects identified during testing relative to the total number of defects in the software. Because the true total is never known in advance, it is usually approximated as the defects found during testing plus those found after release. This metric evaluates how effective the testing process is; the goal is to ensure that most defects are detected and resolved before release.

Formula:

$$\text{DDR} = \frac{\text{Number of defects detected during testing}}{\text{Total number of defects}} \times 100\%$$

- High DDR: indicates that testing catches most defects before release.

- Low DDR: suggests that many defects slip past testing and surface later, when they are typically more costly and time-consuming to fix.

When discussing DDR, another key term to know is Defect Removal Efficiency (DRE). DRE measures how effectively defects are eliminated during the testing phases, giving a broader view of a QA testing service's ability to both detect and fix errors before release. Monitoring both DDR and DRE helps maintain high standards: high values for both indicate that you are in a good position to deliver a high-quality product to your customers.
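
The two metrics can be computed side by side from three counts per release. The Python sketch below assumes, as is common in practice, that the total number of defects is approximated by those found in testing plus those found after release, and it treats DRE as the share of that total actually fixed before release. DRE definitions vary between organizations, so the formula here is one reasonable choice rather than a standard; the function names and figures are hypothetical.

```python
def _total_defects(found_in_testing: int, found_after_release: int) -> int:
    """Approximate the true defect total by everything found so far."""
    total = found_in_testing + found_after_release
    if total == 0:
        raise ValueError("no defects recorded; the ratios are undefined")
    return total


def defect_detection_rate(found_in_testing: int, found_after_release: int) -> float:
    """DDR (%): defects caught by testing out of all known defects."""
    return 100 * found_in_testing / _total_defects(found_in_testing, found_after_release)


def defect_removal_efficiency(fixed_before_release: int, found_in_testing: int,
                              found_after_release: int) -> float:
    """DRE (%): defects fixed before release out of all known defects."""
    if fixed_before_release > found_in_testing:
        raise ValueError("cannot fix more defects than testing found")
    return 100 * fixed_before_release / _total_defects(found_in_testing, found_after_release)


# Hypothetical release: 90 defects found in testing, 80 of them fixed
# before launch, and 10 more reported by users afterwards.
print(defect_detection_rate(90, 10))          # 90.0
print(defect_removal_efficiency(80, 90, 10))  # 80.0
```

The gap between the two numbers (here 10 percentage points) is the share of defects that testing found but that still shipped unfixed.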

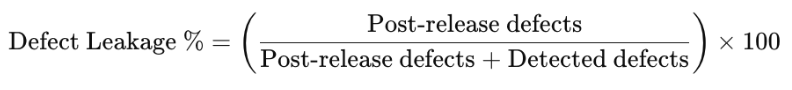

Defect leakage

Defect leakage refers to the percentage of defects that were not detected during testing but were found after the product was released or put into use. The goal is to minimize this rate to improve product quality and testing efficiency. A low defect leakage rate indicates that the testing process identified and addressed errors before launch, reflecting thorough, high-quality testing procedures.

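Formula:

$$\text{Defect leakage} = \frac{\text{Number of defects found after release}}{\text{Number of defects found during testing} + \text{Number of defects found after release}} \times 100\%$$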

- High defect leakage: indicates that many errors were discovered after release, suggesting poor product quality that can hurt customer satisfaction and business reputation.

- Low defect leakage: signifies fewer post-release issues, denoting a high-quality product.

This metric is especially crucial in sectors such as finance, healthcare, and cybersecurity, where software faults can result in significant losses or severe safety issues.
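
Defect leakage is most useful when tracked release by release against a target. The Python sketch below uses the definition above (post-release defects as a share of all known defects), which makes leakage the complement of DDR when DDR's total is approximated the same way. The release names, counts, and the 10% threshold are hypothetical.

```python
def defect_leakage(found_in_testing: int, found_after_release: int) -> float:
    """Defect leakage (%): defects that escaped testing out of all known defects."""
    total = found_in_testing + found_after_release
    if total == 0:
        raise ValueError("no defects recorded; leakage is undefined")
    return 100 * found_after_release / total


# Hypothetical per-release counts: (found in testing, found after release).
releases = {"v1.0": (120, 30), "v1.1": (95, 12), "v1.2": (110, 5)}
THRESHOLD = 10.0  # assumed team target, in percent

for version, (in_testing, after_release) in releases.items():
    leakage = defect_leakage(in_testing, after_release)
    status = "OK" if leakage <= THRESHOLD else "above target"
    print(f"{version}: {leakage:.1f}% leakage ({status})")
```

A falling trend across releases, rather than any single value, is usually the stronger signal that testing is improving.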

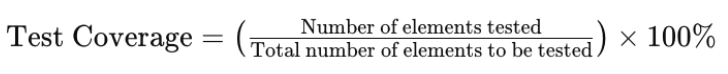

Test coverage

Test coverage is a crucial metric in software testing that measures the percentage of system components that have been tested relative to the total number of components. For example, a mobile application that lets users book movie tickets will likely include features such as account creation, movie selection, seat selection, payment processing, and movie reviews. To make the app work smoothly and catch defects before users do, each of these features should be tested thoroughly, so that every function performs as expected under a variety of scenarios and usage conditions.

Test coverage is commonly measured in three main ways:

- Line coverage: Percentage of code lines executed during testing.

- Branch coverage: Percentage of control-flow branches (such as the outcomes of if and switch statements) exercised during testing.

- Requirement coverage: Percentage of business requirements tested.

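Formula:

$$\text{Test coverage} = \frac{\text{Number of items covered by tests}}{\text{Total number of items}} \times 100\%$$

Here, "items" are lines of code, branches, or business requirements, depending on the type of coverage being measured.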

Monitoring and analyzing test coverage gives developers a clear picture of how thorough the testing process is and where gaps remain, so the testing plan can be adjusted to improve the final product's quality. Keep in mind that coverage shows what was exercised, not that it behaves correctly, so it works best alongside the other metrics. Overall, test coverage is an essential tool for assessing and optimizing software testing and for helping ensure that every part of the application is verified against the expected standards.
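
Requirement coverage for the movie-ticket example can be computed from a simple mapping of test cases to the requirements they exercise. The Python sketch below uses the five features named above; the test case names and the mapping itself are hypothetical.

```python
# Requirements from the movie-ticket example; test names are hypothetical.
REQUIREMENTS = [
    "account creation",
    "movie selection",
    "seat selection",
    "payment processing",
    "movie reviews",
]

# Which requirement(s) each existing test case exercises.
TEST_CASES = {
    "test_sign_up_with_email": ["account creation"],
    "test_browse_and_pick_movie": ["movie selection"],
    "test_pick_seat_then_pay": ["seat selection", "payment processing"],
}


def requirement_coverage(requirements: list[str],
                         test_cases: dict[str, list[str]]) -> tuple[float, list[str]]:
    """Return (coverage percent, requirements with no test)."""
    covered = {req for reqs in test_cases.values() for req in reqs}
    uncovered = [r for r in requirements if r not in covered]
    percent = 100 * (len(requirements) - len(uncovered)) / len(requirements)
    return percent, uncovered


percent, missing = requirement_coverage(REQUIREMENTS, TEST_CASES)
print(f"Requirement coverage: {percent:.0f}%")  # Requirement coverage: 80%
print("Untested:", missing)                     # Untested: ['movie reviews']
```

Line and branch coverage are normally collected by tooling rather than by hand; for Python code, for instance, coverage.py reports both when run with `coverage run --branch`.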

Mean time to detect (MTTD)

Mean Time to Detect (MTTD) is a metric used to measure the average time from the occurrence of an error to its detection. This measurement is crucial for evaluating the effectiveness of monitoring and response processes in systems or applications. MTTD is particularly important in environments where timely response to incidents is critical to maintaining system integrity and performance.

Formula:

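$$\text{MTTD} = \frac{1}{n}\sum_{i=1}^{n}\left(t_{\text{detected},i} - t_{\text{occurred},i}\right)$$

where $n$ is the number of incidents, $t_{\text{occurred},i}$ is when incident $i$ occurred, and $t_{\text{detected},i}$ is when it was detected.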

Monitoring and reducing MTTD is critical for software management and maintenance, particularly in high-reliability environments such as online banking services, financial transaction systems, and healthcare applications. The sooner an incident is detected, the sooner it can be addressed, which helps minimize system downtime, limit user disruption, and maintain a seamless and secure user experience.
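
MTTD is straightforward to compute once each incident has an occurrence time and a detection time. In practice, the occurrence time is often reconstructed from logs after the fact. The Python sketch below averages detection delays using the standard `datetime` module; the incident timestamps are hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical incidents: (when the error occurred, when it was detected).
incidents = [
    (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 9, 12)),
    (datetime(2024, 5, 3, 14, 30), datetime(2024, 5, 3, 15, 0)),
    (datetime(2024, 5, 7, 22, 5), datetime(2024, 5, 7, 22, 11)),
]


def mean_time_to_detect(events: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (detected - occurred) across all incidents."""
    if not events:
        raise ValueError("MTTD needs at least one incident")
    # Start the sum from timedelta() so the durations add up correctly.
    total = sum((detected - occurred for occurred, detected in events), timedelta())
    return total / len(events)


print(mean_time_to_detect(incidents))  # 0:16:00
```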

In conclusion, technological innovations such as AI, ML, RPA, cloud environments, IoT integration, and blockchain are significantly improving the efficiency, accuracy, and security of QA testing. They streamline testing operations and enable more precise defect detection and predictive testing, both of which are crucial for delivering high-quality software. Tracking metrics such as DDR, DRE, defect leakage, test coverage, and MTTD shows whether these investments are actually making testing more effective. Together, they form a strategic approach to QA testing that is essential for maintaining a competitive advantage and achieving long-term business success.